HISTORY OF AI

“Long before the first robots or computers, ancient cultures dreamed of intelligent machines and automata. From mythical creatures to ingenious devices, the concept of Artificial Intelligence has been around for thousands of years. From ancient Greece to China and Egypt, the quest to create lifelike beings has been a recurring theme. Join us on a fascinating journey to explore the ancient roots of AI, and discover how these early ideas paved the way for the intelligent machines of today.”

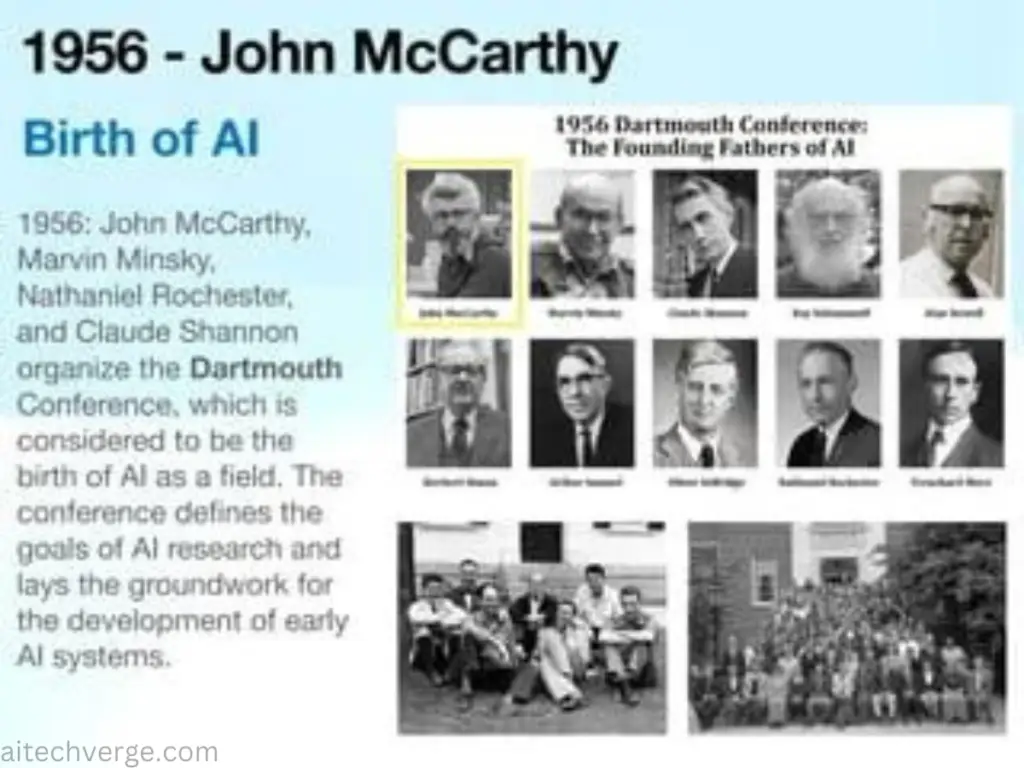

The 1956 Dartmouth Conference: The Birthplace of Modern AI

In the summer of 1956, a group of visionary scientists gathered at Dartmouth College in Hanover, New Hampshire. Led by John McCarthy, Marvin Minsky, Nathaniel Rochester, and Claude Shannon, this pioneering team shared a bold vision: to create machines that could think and learn like humans. This historic conference marked the official launch of the field of Artificial Intelligence.

Imagine the excitement in the air as these brilliant minds debated and brainstormed together. They drew inspiration from the work of Alan Turing, who had proposed the Turing Test six years earlier. The Dartmouth group aimed to push the boundaries of what was thought possible with machines.

One attendee, John McCarthy, later recalled: “We were all convinced that we were on the threshold of a breakthrough. We felt like we were about to unlock the secrets of the universe.”

Some notable outcomes from this conference include:

- The term “Artificial Intelligence” (AI), coined by John McCarthy in the proposal for the conference

- A showcase for the Logic Theorist, a program by Allen Newell and Herbert Simon that could reason its way through proofs in symbolic logic

- Groundwork for the AI programs and research efforts that followed in the years after the conference

Alan Turing’s Proposal: The Turing Test

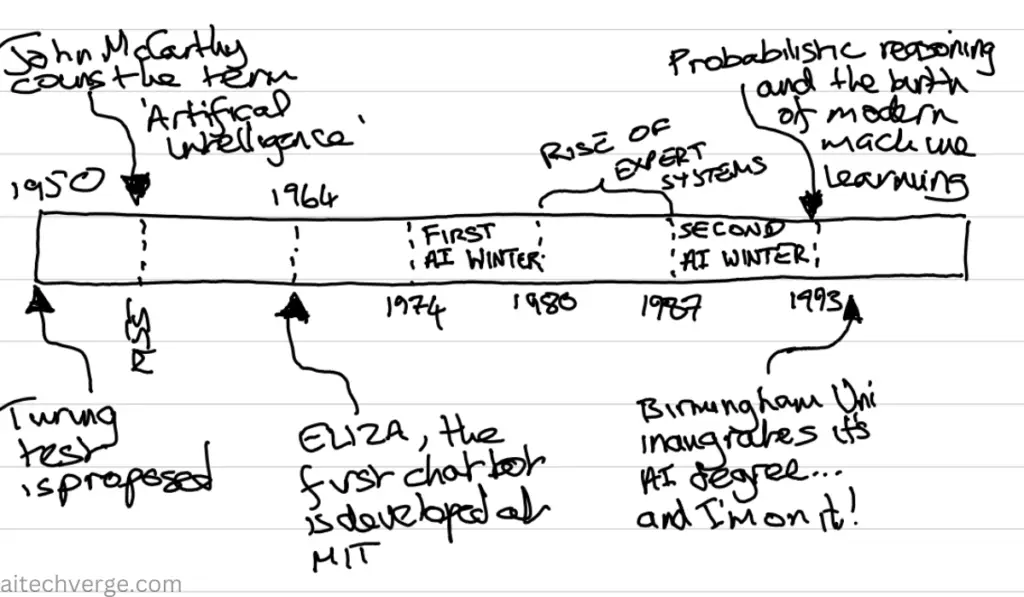

In 1950, British mathematician and computer scientist Alan Turing asked a simple yet profound question: “Can machines think?” To answer this, he proposed a revolutionary idea: the Turing Test. This test would evaluate a machine’s ability to exhibit intelligent behavior equivalent to, or indistinguishable from, that of a human.

Imagine yourself in a conversation with a friend, laughing and chatting about your favorite TV show. Now, imagine that same conversation with a machine. Could you tell the difference? That’s what Turing wanted to explore.

The Turing Test consists of three players:

- A human evaluator

- A human conversational partner

- A machine (or computer program)

The evaluator engages in natural language conversations with both the human and the machine, without knowing which is which. If the evaluator cannot reliably distinguish the machine from the human, the machine is said to have passed the Turing Test.
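The three-player setup above can be sketched as a small simulation. This is only an illustrative toy, not Turing's own formulation: the function names, the canned replies, and the "BEEP" giveaway are all invented for the example, and "cannot reliably distinguish" is approximated as an identification rate close to chance (50%).

```python
import random

def run_imitation_game(evaluator, human, machine, questions, rounds=1000, seed=0):
    """Return how often the evaluator correctly identifies the machine."""
    rng = random.Random(seed)
    correct = 0
    for _ in range(rounds):
        # Hide both players behind the labels "A" and "B", assigned at random.
        players = [("human", human), ("machine", machine)]
        rng.shuffle(players)
        hidden = dict(zip("AB", players))
        # Both hidden players answer the same questions.
        transcripts = {
            label: [answer(q) for q in questions]
            for label, (_, answer) in hidden.items()
        }
        # The evaluator returns the label it believes belongs to the machine.
        guess = evaluator(transcripts, rng)
        if hidden[guess][0] == "machine":
            correct += 1
    return correct / rounds

def keyword_evaluator(transcripts, rng):
    """Toy evaluator (hypothetical): flag a player that says "BEEP", else guess."""
    for label in sorted(transcripts):
        if any("BEEP" in reply for reply in transcripts[label]):
            return label
    return rng.choice(sorted(transcripts))

def human(question):
    return "Hard to say, but probably yes."

def clumsy_machine(question):
    return "BEEP. AFFIRMATIVE."

def mimic_machine(question):
    return "Hard to say, but probably yes."

questions = ["Do you enjoy music?", "Is it raining where you are?"]
caught = run_imitation_game(keyword_evaluator, human, clumsy_machine, questions)
fooled = run_imitation_game(keyword_evaluator, human, mimic_machine, questions)
print(f"clumsy machine identified in {caught:.0%} of rounds")
print(f"mimic machine identified in {fooled:.0%} of rounds")
```

In this sketch the clumsy machine gives itself away in every round, while the mimic is identified only about half the time, which is what guessing alone would achieve. A real test would of course rely on free-form conversation and human judges rather than canned replies.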

Turing’s proposal was groundbreaking, as it shifted the focus from machines’ raw computational power to whether their behavior in conversation could pass for a human’s. His work laid the foundation for the development of chatbots, virtual assistants, and other AI applications that interact with humans more naturally.

For example, in 2014, a chatbot named “Eugene Goostman” convinced 33% of human evaluators that it was a 13-year-old boy, although many researchers questioned whether this amounted to genuinely passing the test.

Turing’s legacy extends far beyond the Turing Test. His work on codebreaking during World War II and his contributions to computer science and logic have inspired generations of researchers and scientists.

The Birth of AI Programs: Logic Theorist and Beyond

In 1956, the Logic Theorist, often described as the first AI program, was born. Developed by Allen Newell, Herbert Simon, and Cliff Shaw, this pioneering program was designed to simulate human problem-solving abilities. It marked the beginning of a new era in AI research, as scientists started building machines that could reason.

Imagine being part of a team that creates a machine that can work through problems step by step, much as you do. That’s what Newell and Simon experienced as they developed the Logic Theorist.

This program was designed to simulate human problem-solving by:

- Reasoning logically

- Solving problems step-by-step

- Using rules of thumb (heuristics) to focus the search on promising steps

The Logic Theorist was a groundbreaking achievement, proving dozens of theorems from Whitehead and Russell’s Principia Mathematica and demonstrating that machines could be programmed to reason. This innovation paved the way for the development of more advanced AI programs.

Other notable early AI programs include:

- ELIZA (1966): A chatbot that could simulate a conversation, developed by Joseph Weizenbaum

- MYCIN (1976): An expert system that could diagnose bacterial infections and recommend antibiotic treatments, developed at Stanford University by Edward Shortliffe and colleagues

These early programs may seem simple compared to today’s AI systems, but they laid the foundation for the intelligent machines we use and interact with daily.
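To give a flavor of how simple these early programs could be, here is a minimal sketch in the spirit of ELIZA: it matches the user's message against a few patterns and turns the matched words back into a question. The patterns, replies, and function names below are invented for illustration and are not taken from Weizenbaum's original script.

```python
import re

# Swap first- and second-person words so the reply points back at the user.
REFLECTIONS = {"i": "you", "am": "are", "my": "your", "me": "you",
               "you": "I", "your": "my"}

# (pattern, reply template) pairs tried in order; invented for this sketch.
SCRIPT = [
    (re.compile(r"\bi need (.*)", re.I), "Why do you need {0}?"),
    (re.compile(r"\bi am (.*)", re.I), "How long have you been {0}?"),
    (re.compile(r"\bbecause (.*)", re.I), "Is that the real reason?"),
]

def reflect(fragment):
    """Rewrite a fragment from the user's point of view to the program's."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)

def respond(message):
    """Return the reply for the first matching pattern, or a generic prompt."""
    text = message.strip().rstrip(".!?")
    for pattern, template in SCRIPT:
        match = pattern.search(text)
        if match:
            return template.format(*(reflect(g) for g in match.groups()))
    return "Please tell me more."

print(respond("I need a break from my job."))
print(respond("Hello there"))
```

Nothing here understands the conversation; the program only rearranges the user's own words, which is part of why such programs look simple next to today's systems.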

Mimicking Human Decision-Making: The Rise of Expert Systems

In the 1970s and 1980s, AI researchers aimed to create systems that could mimic human decision-making. They developed Expert Systems, designed to replicate the problem-solving abilities of human experts in specific domains.

Imagine having a seasoned doctor or a skilled engineer by your side, guiding you through complex decisions. That’s what Expert Systems aimed to provide – a way to capture human expertise and make it accessible to others.

These systems consisted of:

- Knowledge base: A repository of expert knowledge and rules

- Inference engine: A program that applies the rules to solve problems

- User interface: A way for users to interact with the system
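The three components above can be sketched in a few lines. This is a toy under stated assumptions: the car-troubleshooting rules are invented for illustration, and the engine uses simple forward chaining (keep applying rules until nothing new can be concluded). Real systems were far larger, and MYCIN, for instance, reasoned backward from hypotheses and attached certainty factors to its rules.

```python
# Knowledge base: each rule pairs a set of required facts with a conclusion.
# These rules are hypothetical and exist only to illustrate the structure.
RULES = [
    ({"engine_cranks", "no_spark"}, "ignition_fault"),
    ({"ignition_fault", "old_spark_plugs"}, "replace_spark_plugs"),
    ({"engine_silent", "lights_dim"}, "battery_low"),
    ({"battery_low"}, "charge_battery"),
]

def infer(facts, rules=RULES):
    """Inference engine: forward-chain until no rule adds a new fact."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for conditions, conclusion in rules:
            # A rule fires when all of its conditions are already known.
            if conditions <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known

def consult(observations):
    """User interface: return only the conclusions the engine derived."""
    derived = infer(observations) - set(observations)
    return sorted(derived)

print(consult(["engine_silent", "lights_dim"]))
```

Keeping the rules in plain data, separate from the engine that applies them, reflects the design idea behind expert systems: domain experts could extend the knowledge base without anyone rewriting the reasoning code.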

Expert Systems were widely adopted in various fields, including:

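- Medicine: MYCIN (1976) helped diagnose bacterial infections and recommend treatments, and CADUCEUS (mid-1980s) supported diagnosis in internal medicine

- Engineering: R1, also known as XCON (in use from 1980), configured computer system orders for Digital Equipment Corporation

- Finance: Rule-based systems helped assess credit risk and approve loans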

These systems revolutionized decision-making processes, making it possible for non-experts to access expert-level knowledge and guidance.

For example, expert systems pioneer Edward Feigenbaum recalled: “We were amazed when MYCIN outperformed human experts in diagnosing certain infections. It was a turning point in AI research.”

AI Winter: The Funding Slump and Reduced Interest

By the late 1980s, the excitement surrounding AI’s potential had worn off, and the field experienced a significant decline in funding and interest. This period, known as the “AI Winter,” lasted for several years and had a profound impact on the research community.

Imagine dedicating your career to a field that suddenly seems abandoned by the rest of the world. That’s what many AI researchers experienced during this challenging period.

The causes of the AI Winter were varied:

- Overpromising and underdelivering: Early AI systems failed to meet expectations, leading to disillusionment.

- Lack of understanding: The complexity of human intelligence made it difficult to replicate with machines.

- Funding cuts: Government and corporate funding dwindled, forcing researchers to seek alternative sources.

Real-life examples of the AI Winter’s impact include:

- Reduced research grants: Many projects were put on hold or canceled due to lack of funding.

- Brain drain: Talented researchers left the field, seeking more stable career opportunities.

- Discontinued projects: Ambitious AI initiatives, like the Japanese Fifth Generation Computer Systems project, wound down without meeting their goals.

Despite the challenges, a dedicated group of researchers persisted, driven by their passion for AI’s potential. Their work, though often unrecognized at the time, laid the foundation for the AI resurgence in the 1990s.

AI Resurgence: Revival of Expert Systems and New Approaches

By the mid-1990s, the AI Winter began to thaw, and the field experienced a resurgence of interest and innovation. Expert systems, once considered a failed promise, were revived with new technologies and approaches. This revival also saw the emergence of new AI subfields, which would shape the industry’s future.

Imagine being part of a community that had weathered the storm, only to discover new tools and techniques that rekindled the excitement and promise of AI research.

The revival was fueled by:

- Advances in computing power and data storage

- New machine learning algorithms, like decision trees and neural networks

- The rise of the Internet and access to vast amounts of data

Real-life examples of this resurgence include:

- Expert system revival: Tools like CLIPS (first released by NASA in the 1980s) and Jess (a Java rule engine from the mid-1990s) enabled more efficient development and deployment.

- AI applications in finance: Systems like HNC Software’s Falcon (early 1990s) used neural networks to detect credit card fraud.

- Speech recognition: Dragon Systems’ NaturallySpeaking (1997) allowed users to dictate text to their computers in continuous, natural speech.

Researchers like David Ferrucci, who later led the development of the IBM Watson question-answering system, recalled: “We were driven by the desire to create something truly intelligent. The resurgence of AI gave us the tools and confidence to pursue our dreams.”

AI Breakthroughs: Machine Learning, NLP, and Computer Vision

The 2000s and 2010s brought major breakthroughs in machine learning, natural language processing (NLP), and computer vision. These breakthroughs enabled AI systems to learn from data, understand human language, and interpret visual information, revolutionizing numerous industries and aspects of our lives.

Imagine being able to communicate with machines in your language, or having a self-driving car navigate through busy streets. These innovations made it possible.

Machine learning:

- Google DeepMind’s AlphaGo (2016) defeated world champion Lee Sedol at Go, a game long thought to be out of reach for machines.

- Netflix’s recommendation algorithm (2006) transformed the way we discover new movies and TV shows.

Natural Language Processing (NLP):

- Siri (2011) and Alexa (2014) brought voice assistants into our homes and pockets.

- IBM Watson (2011) defeated champion contestants on the quiz show Jeopardy!, showing that machines could parse and answer questions posed in natural language.

Computer Vision:

- Waymo, which began as Google’s self-driving car project in 2009, and driver-assistance systems like Tesla Autopilot (2015) began navigating roads and changing the transportation landscape.

- Facebook’s facial recognition technology (2010) made tagging friends in photos easier than ever.

Researchers like Yann LeCun, director of AI Research at Facebook, reflected: “We’ve made tremendous progress in AI, but we’re just getting started. The future holds endless possibilities.”

AI in Our Lives: Virtual Assistants, Self-Driving Cars, and Beyond

AI has transformed from a futuristic concept to a tangible presence in our daily lives. Virtual assistants, self-driving cars, and various AI-powered applications have become an integral part of our routines, simplifying tasks and enhancing experiences.

Imagine having a personal assistant like Amazon’s Alexa or Google Assistant, always ready to help, or enjoying a safe and relaxing ride in a self-driving car like Waymo or Tesla. AI has made it possible.

Virtual Assistants:

- Amazon’s Alexa (2014) and Google Assistant (2016) revolutionized smart homes and voice interaction.

- Apple’s Siri (2011) and Microsoft’s Cortana (2014) brought AI-powered assistance to our pockets.

Self-Driving Cars:

- Waymo (2009) and Tesla Autopilot (2015) pioneered autonomous transportation, promising safer roads and enhanced mobility.

AI in Daily Life:

- Fraud detection systems, like those used by PayPal (2002), protect our financial transactions.

- Personalized product recommendations, like those on Amazon (2000), enhance our shopping experiences.

People like Sarah, a busy working mother, appreciate the convenience: “Alexa helps me manage my schedule, play music, and even order groceries. I couldn’t imagine life without her!”

Deep Learning: The Game-Changer in AI Capabilities

The development of deep learning algorithms marked a significant milestone in AI’s history, enabling machines to learn and improve with unprecedented accuracy. Inspired by the human brain, these algorithms have revolutionized AI’s capabilities, driving breakthroughs in image and speech recognition, natural language processing, and more.

Imagine being able to teach a machine to recognize objects, understand speech, or even create art, just like a human. Deep learning made it possible.

Key Deep Learning Algorithms:

- Convolutional Neural Networks (CNNs): Excel in image recognition and computer vision tasks.

- Recurrent Neural Networks (RNNs): Enable speech recognition, language translation, and text summarization.

- Generative Adversarial Networks (GANs): Create realistic images, audio, and other synthetic media.

Real-Life Examples:

- Google DeepMind’s AlphaGo (2016) defeated a human world champion in Go by combining deep neural networks with tree search.

- Facebook’s DeepFace system (2014) uses deep learning for accurate face identification.

- Amazon’s Alexa and Google Assistant use deep learning for speech recognition and natural language processing.

Researchers like Andrew Ng, a pioneer in deep learning, reflected: “Deep learning has transformed AI research, enabling machines to learn like humans. The potential is vast, and we’re just getting started.”

AI’s Future: Trends, Applications, and Ethical Considerations

As AI continues to evolve, it’s essential to explore current trends, potential applications, and the ethical considerations surrounding its development. From healthcare to education, AI has the potential to transform industries and improve lives. However, it also raises important questions about bias, privacy, and accountability.

Current Trends:

- Explainable AI (XAI): Developing AI that can explain its decisions and actions.

- Edge AI: Processing data closer to the source, reducing latency and improving real-time decision-making.

- Transfer Learning: Enabling AI models to apply what they learn in one domain to another.

Potential Applications:

- Personalized medicine: AI can help tailor treatments to individual patients’ needs.

- Intelligent tutoring systems: AI can enhance student learning and teacher support.

- Autonomous robots: AI can improve manufacturing, logistics, and healthcare.

Ethical Considerations:

- Bias and discrimination: AI systems can perpetuate existing social inequalities.

- Privacy: AI systems often rely on vast amounts of personal data.

- Accountability: Who is responsible when AI systems make mistakes?

Real-Life Examples:

- IBM’s Watson for Oncology helps doctors identify personalized cancer treatments.

- Google’s AI-powered education platform, Google Classroom, supports teachers and students.

- Woebot, a chatbot launched in 2017, aims to improve mental health support by guiding users through techniques from cognitive behavioral therapy.

Experts like Dr. Kate Crawford, a leading AI ethicist, emphasize: “AI has tremendous potential, but we must address the ethical implications proactively. It’s a human responsibility to ensure AI benefits everyone, not just a select few.”